Abstract: AI video models advanced significantly in 2025, particularly in avatar expressiveness, enabling me to produce much better videos than in 2024. Further improvements are needed in 2026 to realize the potential of individual creators. Here are my 10 most popular videos, as determined by audience clicks and viewing durations.

(Nano Banana Pro)

I thought that 2024 was the year of AI video and that 2025 would be the year of AI agents. There certainly was strong progress in AI agents in 2025, but they’re not quite ready for mainstream use yet, even as enterprise use cases are starting to emerge.

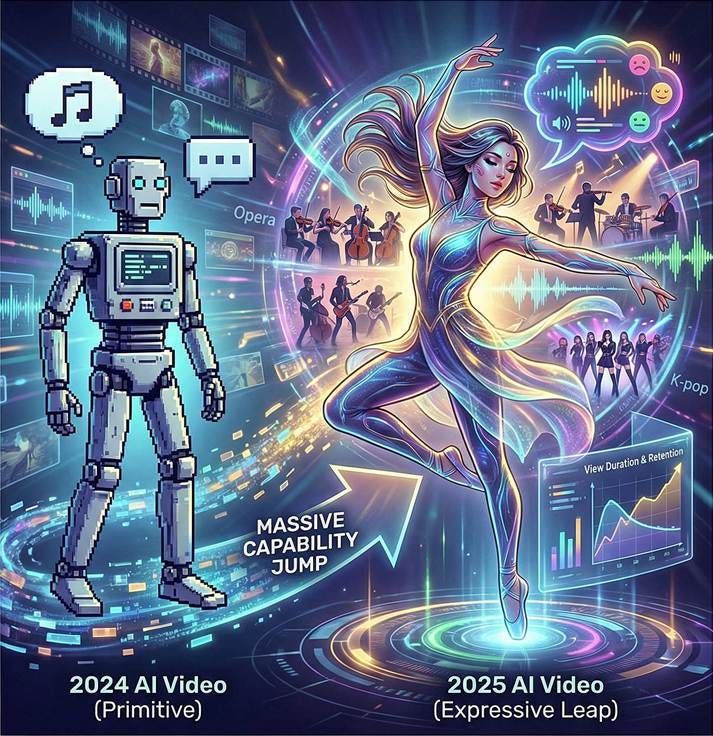

AI video did indeed do well in 2024, as shown by my list of the top 10 videos of 2024 and particularly my music video “Top 10 UX Articles of 2024,” made in late December 2024. But the 2024 progress in AI video was nothing compared to the leaps in AI video capabilities realized in 2025. In retrospect, that December 2024 video looks quite primitive compared to the videos I released in December 2025 (for example, “Old Workers Stay Creative With AI”).

To demonstrate the progress in AI video this year, I’ve made a highlights reel with clips from my best music videos released from January to December 2025. The clips are presented in chronological order, allowing you to judge the improvements.

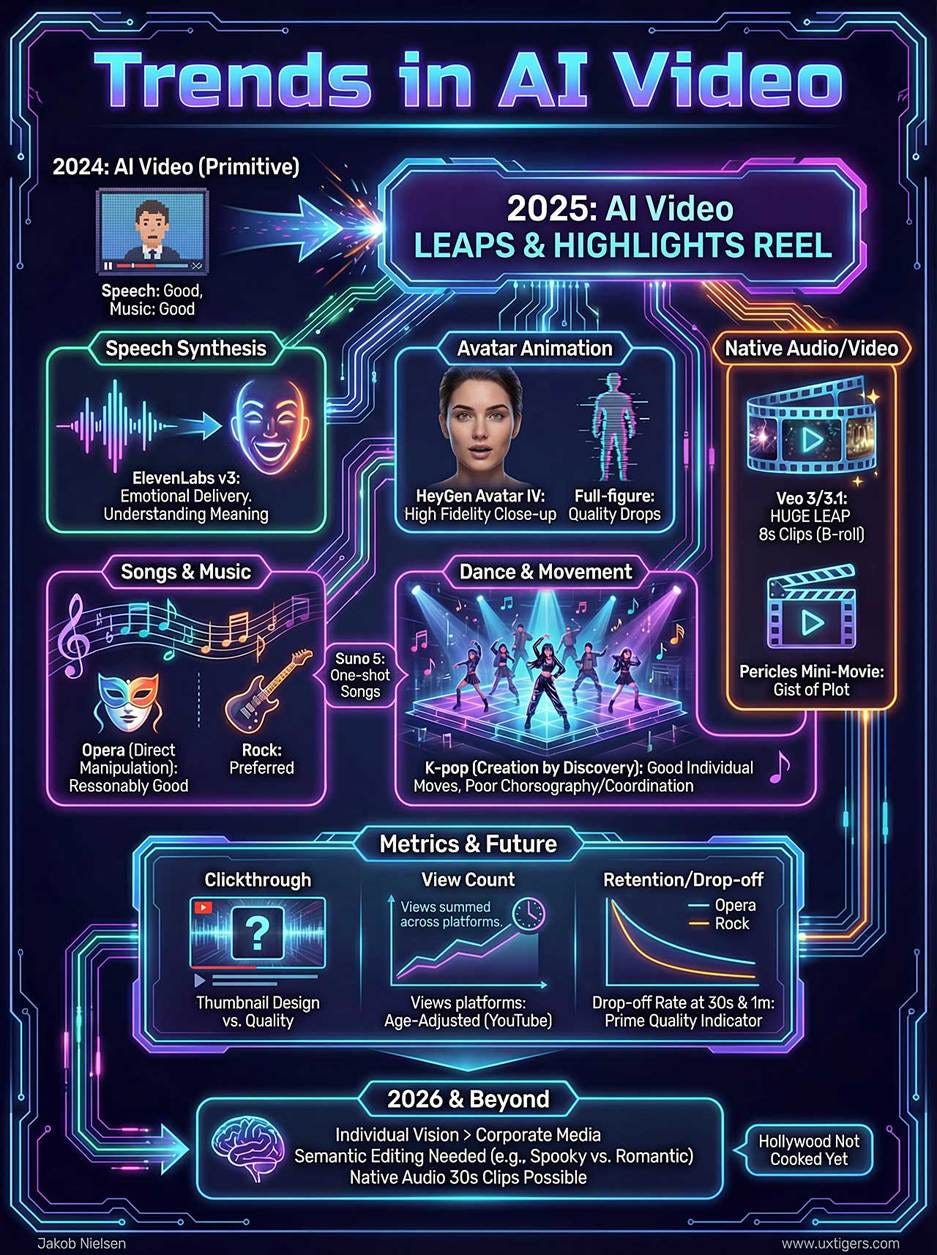

I judge the rate of improvement to be as follows for the different components that go into making AI videos:

- Speech synthesis: only modest improvements, because speech was already good by late 2024. The biggest improvement has been in models like ElevenLabs v3 that use a language model to understand the meaning of the words it’s being asked to speak, allowing it to adjust the emotional delivery of its lines. (For an example, watch my explainer video “Slow AI: User Control for Long Tasks Explained in 5 Minutes.”)

- Songs and music: only modest improvements, because these were also already good by late 2024. One first: I was finally able to make an operatic aria that sounds quite good (no Mozart, though): “Direct Manipulation.” As recently as the summer of 2025, all my attempts at opera sounded like a bad Broadway musical rather than a proper opera, so I never published them.

-

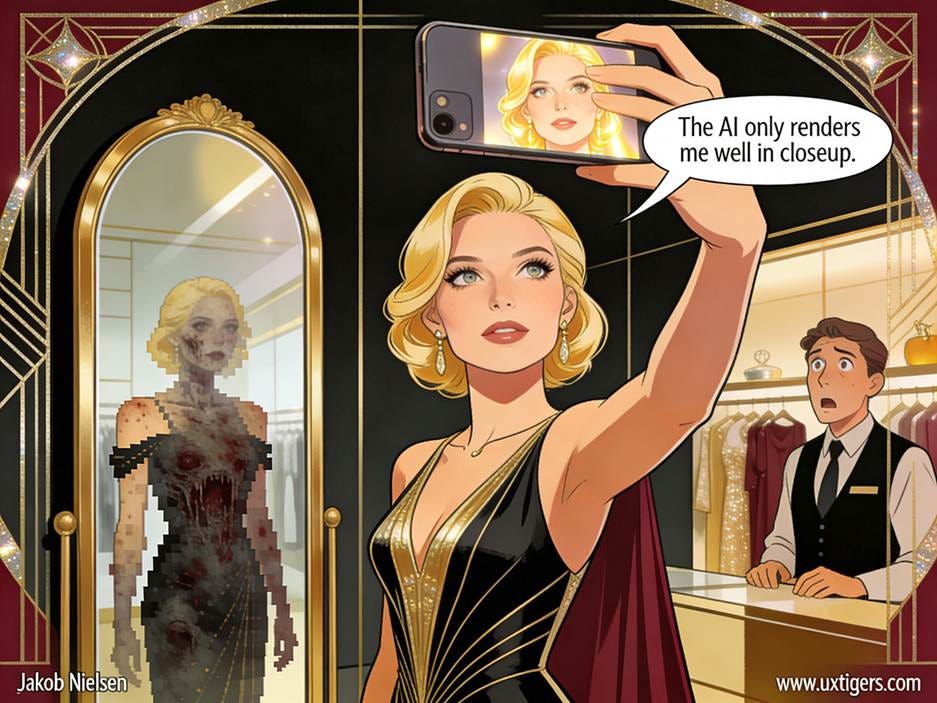

Avatar animation: large positive factors, with fashions like HeyGen Avatar IV now able to pretty excessive constancy, so long as we stick with speaking head close-up views. High quality drops for full-figure presenters. (For example, watch my music video “Creation by Discovery: Navigating Latent Design House,” and observe how a lot better the singer performs within the closeup cuts.)

Current AI avatar models are notably better at rendering close-up views than full-figure avatars. (Seedream 4.5)

-

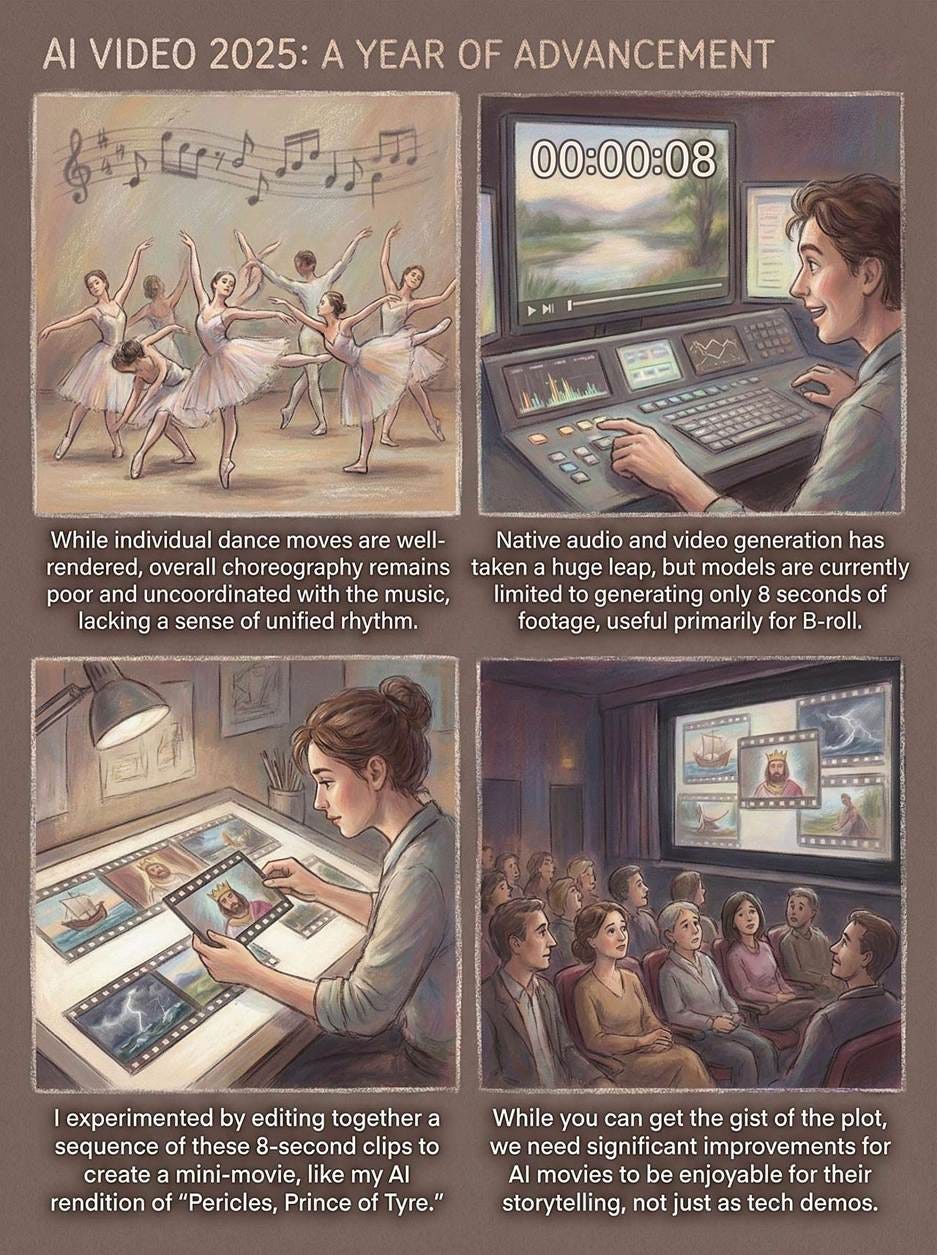

Dance and motion animation: a lot better now, however nonetheless removed from what’s wanted. For instance, watch the dance sequences in “Creation by Discovery: Navigating Latent Design House,” which was my current try at making a Okay-pop efficiency. Particular person dance strikes are normally good, however the general choreography is poor and by no means as much as JYP requirements. My guess is that the video fashions are skilled on quite a few Okay-pop performances, so that they have the person strikes down pat and may carry out a synchronized group dance effectively. However they haven’t any idea of coordinating the dance with the music. The identical is true for the singers’ actions or the musicians’ use of their devices. Appears to be like effective if the music is muted, however if you play a tune as supposed, you may see that the picture and the audio aren’t coordinated, aside from the lip synch.

- Native audio with video generation: a huge leap forward with Veo 3 (and now 3.1), but the model only generates 8 seconds of video, meaning that it’s only useful for B-roll, not for full movies, music videos, or explainers. As an experiment, I did edit together a sequence of 8-second clips for my AI rendition of Shakespeare’s most obscure play, “Pericles, Prince of Tyre.” The resulting mini-movie does allow you to get the gist of the plot, but I’ll be the first to admit that we need much better for AI movies to be enjoyable for their storytelling, rather than appreciated as demos of the technology.

AI video developments showed marked improvements in all areas. (Nano Banana Pro)

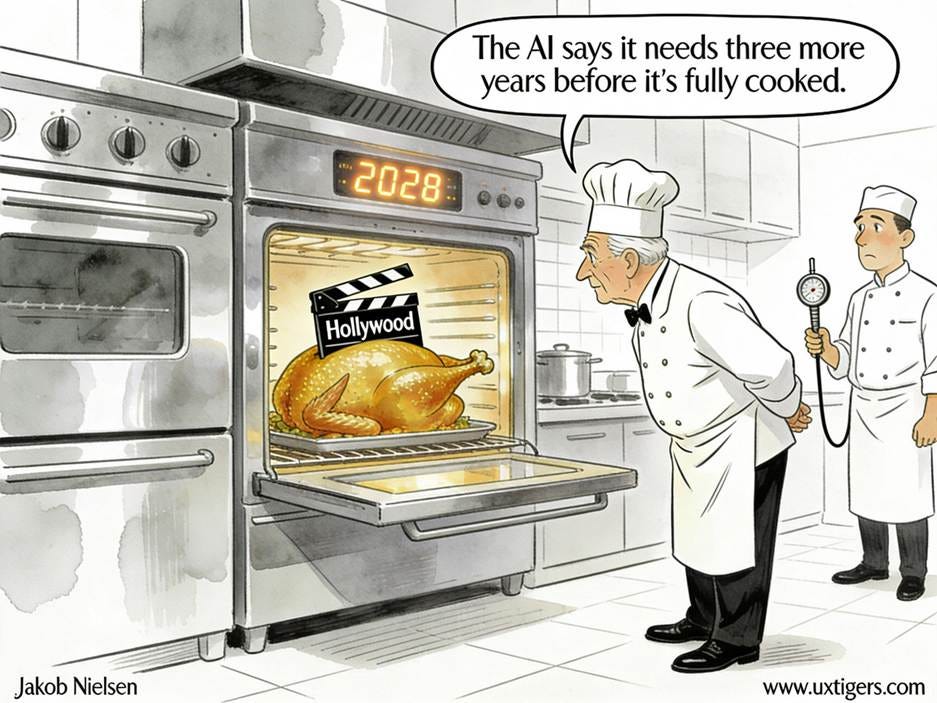

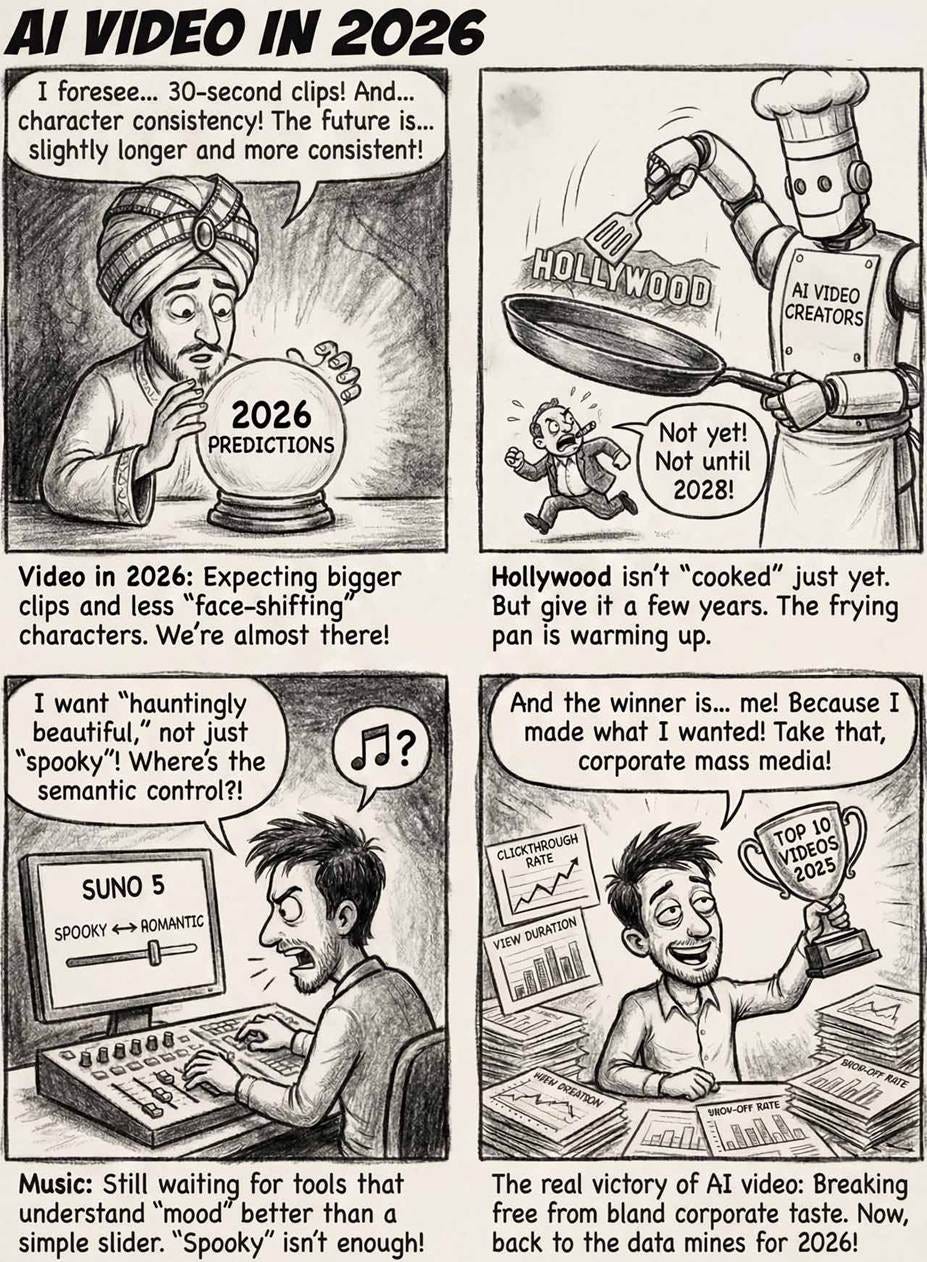

Contrary to what some AI influencers say, I don’t think that “Hollywood is cooked” yet, and it won’t be cooked in 2026 either. We’ll definitely see bigger and bigger parts of mainstream feature films and TV shows made with AI, but probably not films fully made with AI until maybe 2028. For now, the best use of significant AI by a legacy studio seems to be Amazon’s series House of David, particularly in battle scenes and other special effects. (It’s interesting that I refer to Amazon as a legacy studio, but after its acquisition of MGM and continued releases of fairly traditional films and TV shows with a very traditional “studio”-like production process, I think this is appropriate, especially to distinguish Amazon from the emerging independent creators.)

Traditional Hollywood film production is not cooked yet, but by 2028, I expect all major studios to either make the transition to AI or truly become cooked. (Seedream 4.5)

I do expect strong improvements in all parts of the AI video pipeline, especially in native audio generation, where you get a full clip in one shot rather than having to generate the image and audio separately. Clips of 30 seconds are a realistic possibility by the end of 2026.

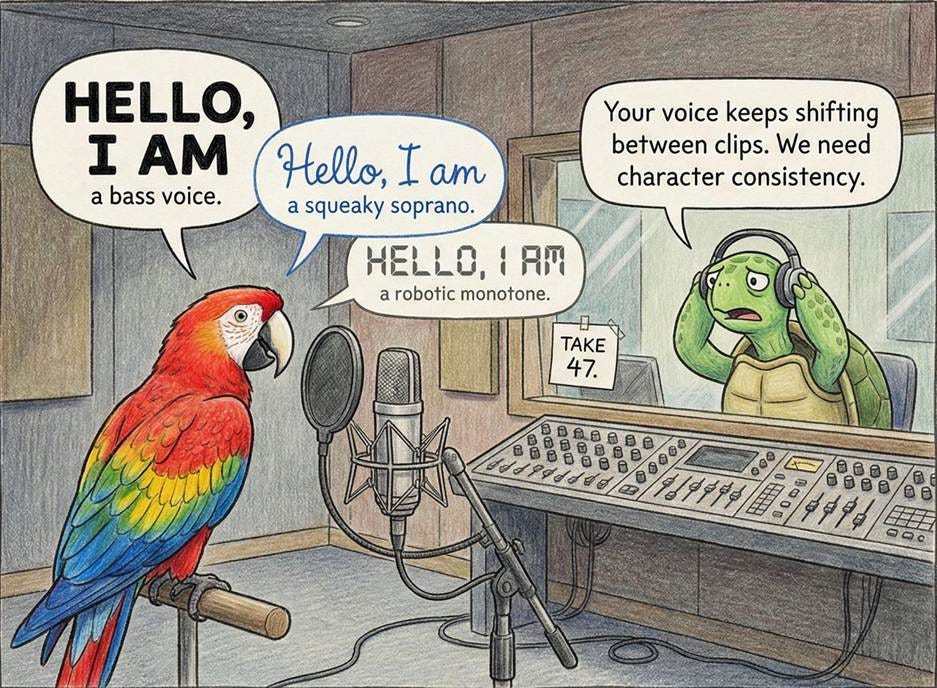

Character consistency from one clip to the next is already possible for the character’s visual appearance by uploading still images as references for the video model. (See my video “Aphrodite Explains Usability” for an example made with Veo 3.1.) However, the character’s voice currently shifts from one clip to the next. This means that we can’t suspend disbelief and experience a sequence of cuts as a single progression. Manually made movies have offered that experience since D. W. Griffith advanced cross-cutting and continuity editing, systematizing them into a coherent “film language” that could sustain long, complex narrative features rather than being perceived as disconnected short scenes. AI voice consistency in 2026? I think it’s more likely than not, given the demand.

Currently, the same character sounds different across clips, even when generated by the same AI video model. (Nano Banana Pro)

Music is needed both for music videos and to enrich the soundtrack of other videos. One-shot songs from Suno 5 are already good. I certainly tap my feet more to my own songs (made with Suno) than I do when listening to any chart-topper. And I rewatch my own music videos with pleasure many times more than I watch any million-view commercial music video, even from my favorite K-pop label (JYP Entertainment). Of course, this is because I make exactly what I like, but that’s the point about AI-supported creation: we’re no longer limited by corporate mass media and its bland taste. Instead, everybody can pursue their own individual vision and create what they like.

Music models still offer a fairly limited ability to navigate the latent design space. Suno recently added equalizer (EQ) editing to music tracks, but this feature seems like a very limiting pre-AI approach to music creation: you can vary the intensity of the sound in different frequency bands. Instead, we need semantic editing features. At a minimum, we need the ability to request things like making the drums softer or emphasizing the woodwinds, as a symphony orchestra conductor might do to achieve a spooky or supernatural effect for a certain scene in a ballet. (Thinking of you, Giselle.) However, true semantic editing would allow the user to specify a higher level of intent (e.g., spooky vs. romantic mood) to navigate the latent space along meaningful vectors. This example is for music, because I want to vent about Suno’s misguided new feature, but the same point applies to any of the constituent media forms that come together in a full video. (Speech, movement, dance, action scenes, general acting, etc.)

In sum: strong advances in AI video in 2025, but it’s not there yet for ambitious projects. We can taste the future, though. (Nano Banana Pro)

In deciding the list of my “top” videos for 2025, I considered several metrics:

- Clickthrough rate. This is more about the thumbnail design than about the quality of the video at the other end of the click. Clickthrough rates are the lifeblood of creators who live by their views, because no video view happens unless the user clicks the thumbnail (or other link) first. However, even though I like the thumbnails I’ve been making lately, I don’t score super-high clickthrough rates because I refuse to engage in overused YouTube tropes, such as the exaggeratedly astonished face close-up. (These thumbnail styles are overused precisely because they work and deliver clicks: human brains have an enormous number of neurons devoted to face recognition and emotion recognition.)

90% of YouTube thumbnails seem to have come from the same casting call that rejected anybody who didn’t have the same signature look of extreme astonishment. They’re designed that way because this look works. (Seedream 4.5)

- View count. This is a more meaningful metric, because the various services usually only record a “view” if the user doesn’t just click through from the thumbnail but also continues to watch the video for some time. (Even if not necessarily to the end.) A terrible video with a compelling thumbnail can have a high click count but a low view count. I used views as a major component of my selection process, with the following modification: I summed views across all the platforms where I publish my videos (currently YouTube, LinkedIn, Instagram, and X), but with an age-related adjustment for the YouTube numbers. The older the video, the more time it has had to rack up YouTube views, whereas the social media platforms only expose a video for a few days, meaning that old videos don’t accumulate more views than new videos there.

- View duration. This is a top indicator of video quality: if people watch longer, they must like it more.

View duration can be measured in several ways. YouTube reports two metrics: average view duration and percentage of the video watched. Neither is a fair metric. Average view duration can only ever be high for long videos, whereas a high percentage watched is much easier to achieve for short ones. For example, my video “Aphrodite Explains Usability” runs for only 41 seconds, making it impossible for it to score even a full minute in average viewing time, even if people like it enough to watch the entire thing. On the other hand, “Transformative AI (TAI): Scenarios for Our Future Economy” is an 8-minute video, and it’s empirically proven that very few online users keep watching a video for that long, even when it’s good. Its average view duration is 4 minutes 3 seconds, so it handily beats Aphrodite, who never had a chance.

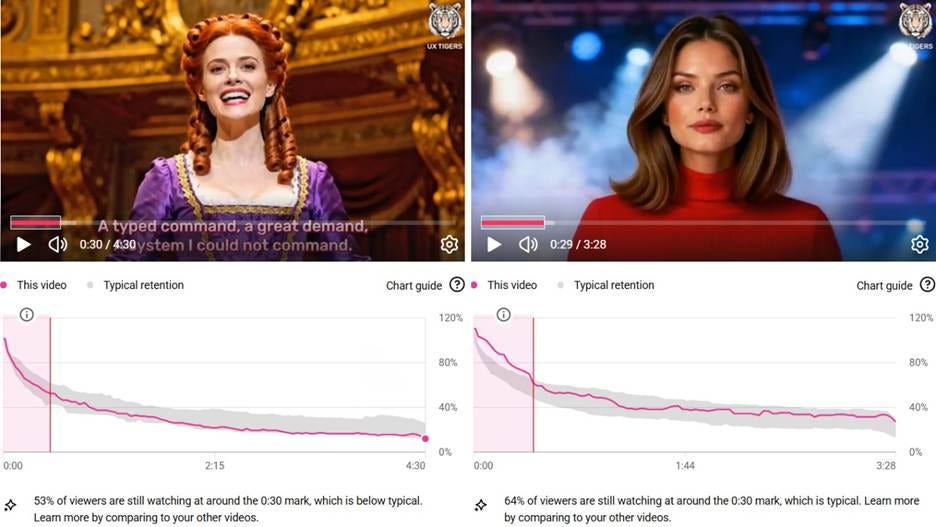

I prefer a third metric: the drop-off rate. YouTube tells you how many viewers are still watching after 30 seconds: 49% for Aphrodite and 68% for Transformative AI. Now we’re talking: the second video wins on this fair metric, where a video of any length (above 30 seconds, that is) has an equal chance.

Comparing the viewer retention curves for two versions of my Direct Manipulation song: opera version left and rock version right. People watched the rock version longer. (Of course, I know that the mainstream audience doesn’t like opera, but I do, so I reserve the right to make more operas in the future.)

By default, YouTube reports retention at 30 seconds, but you can get the number at other points by dragging along the retention curve. I also like to calculate what percentage of the people who were watching at the 0:30 mark are still watching after a full minute: these are the real fans who truly enjoy that video.

In the example I showed here, these numbers are:

- Opera version: 53% watching after 30 seconds, 38% watching after 1 minute. Thus, 72% of the half-minute viewers stuck with the video for a full minute.

- Rock version: 64% watching after 30 seconds, 49% watching after 1 minute. Thus, 77% of the half-minute viewers kept watching for a full minute.

Both metrics show that people preferred the rock version.
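The “real fans” figure is simply a ratio of two readings from the retention curve: the share still watching at 1:00 divided by the share still watching at 0:30. Below is a minimal Python sketch of that arithmetic. It assumes you have already read both percentages off the curve by hand. The function name `conditional_retention` is a hypothetical label for illustration; it is not part of YouTube Studio or any API.

```python
def conditional_retention(pct_at_30s: float, pct_at_60s: float) -> float:
    """Share (in %) of viewers present at 0:30 who are still watching at 1:00.

    Both inputs are percentages of the original audience, as read off an
    audience-retention curve (hypothetical helper, not a YouTube API call).
    """
    # Retention can only fall over time, and the 0:30 figure must be non-zero.
    if not 0 < pct_at_30s <= 100 or not 0 <= pct_at_60s <= pct_at_30s:
        raise ValueError("expected 0 < pct_at_30s <= 100 and 0 <= pct_at_60s <= pct_at_30s")
    return 100.0 * pct_at_60s / pct_at_30s


# Readings quoted above for the two versions of "Direct Manipulation".
versions = {"opera": (53, 38), "rock": (64, 49)}

for name, (r30, r60) in versions.items():
    share = conditional_retention(r30, r60)
    print(f"{name}: {r30}% at 0:30, {r60}% at 1:00, "
          f"{share:.0f}% of 0:30 viewers stayed to 1:00")
```

Dividing by the 0:30 figure factors out the early drop-off the two versions share. The comparison then reflects only how well each version holds viewers who made it past the first half-minute.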

In 2026, AI video will likely realize more of its potential to empower independent creators. The longer-term future may be even more revolutionary, pivoting from linear storytelling to worldbuilding, in which users shift from passive observers to participants immersed in an environment specified by the creator. (Nano Banana Pro)

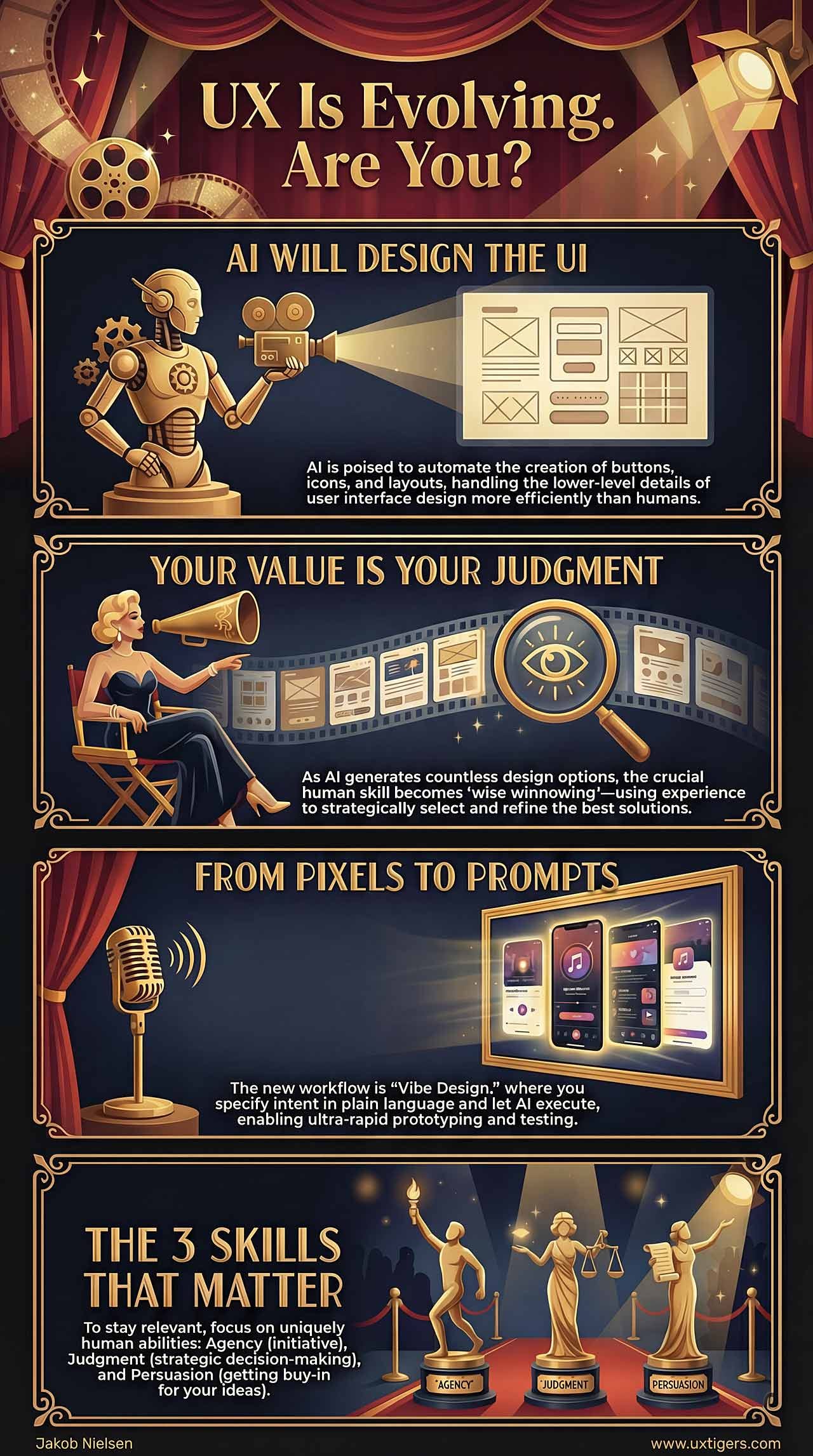

AI products have evolved from enhancing classical user interfaces to becoming the primary means for users to engage with digital features and content. This may mean the end of UI design in the traditional sense, refocusing designers’ work on orchestrating the experience at a deeper level, especially as AI agents do more of the work.

(Also available as a music video)

Over the coming decade, AI-provisioned intelligence will become almost free and instantly available. AI won’t just assist professionals; it will take over much of the work as a packaged, instant service provider. Welcome to the age of boundless skill scalability, where services transform into software and economies grow beyond human imagination.

All in good fun. The action figure was first created as a still image using ChatGPT’s native image model, then animated with Kling 1.6. The video version seems more tangible than the still image, thanks to the 3D animation. I’m pleased that computers and AI can help humans have fun and enjoy themselves. It doesn’t always have to be so serious.

(Also available as a music video)

AI transforms software development and UX design through natural-language intent specification. This shift accelerates prototyping, broadens participation, and redefines roles in product creation. Human expertise remains essential for understanding user needs and ensuring quality outcomes, balancing technological innovation with professional insight.

(Also available as a music video)

The UX field is in transition. This avatar video explains Jakob Nielsen’s predictions for the 6 themes for the user experience profession in 2025.

The key differences between User Interface (UI) and User Experience (UX). UI comprises the tangible elements users interact with, such as buttons, menus, and layouts, which can be graphical, gestural, or auditory. UX, however, encompasses a user’s overall feelings, interpretations, and satisfaction when using a product. Although UX is shaped by UI, it is not designed directly but is influenced by UI choices, product names, and marketing messages. Ultimately, AI is expected to automate most UI design tasks, enabling human designers to focus more strategically on enhancing UX.

Jakob Nielsen’s 6th usability heuristic, “Recognition Rather than Recall,” advises designers to minimize users’ memory load by making key information and options visible or easily accessible. In other words, interfaces should allow users to recognize elements (by seeing cues or prompts) instead of forcing them to recall information from memory.

UX professionals only have a few years to adapt before AI reshapes everything. Legacy skills like wireframing are becoming obsolete as AI handles technical tasks. The future demands uniquely human abilities: Agency (taking initiative), Judgment (choosing from AI options), and Persuasion (selling ideas). Best to join an AI-native company and abandon resistance to change. This transition period isn’t doom; it’s opportunity. We’re actively inventing UX’s future through experimentation. Time to trade legacy expertise for future relevance.

Prefer error prevention over recovery. Design with constraints (e.g., date pickers, dropdowns, smart defaults), not free text. Use inline validation and autocomplete as invisible guides. Confirm only destructive actions. Scale friction to risk: nudges for minor issues, speed bumps for irreversible ones. Prevention cuts support costs and builds user confidence through invisible craftsmanship.

(Also available as an explainer video)

AI is surging from early-adopter novelty to everyday utility. Yet this transition is uneven across countries and use cases. In some cases, AI has already crossed Geoffrey Moore’s famed chasm between visionary early adopters and the pragmatic early majority, while in other cases, AI diffusion is much slower.

Available as both a music video and an avatar explainer. (I’m particularly pleased with the music version: this is a case where the song lyrics explain the story better than the prose narration.)

The human aging process is detrimental to the brain, particularly degrading fluid intelligence, which peaks around age 20. Fortunately, crystallized intelligence continues to increase, and the combination of the two means that creative knowledge workers are at their best around age 40. Then, downhill! AI changes this age-old picture by augmenting older creative professionals’ declining fluid intelligence, allowing them to make full productive use of their superior crystallized intelligence. AI extends the creative careers of older professionals by several decades.

(Unfortunately, my analysis of how AI helps seniors wasn’t very popular, but I think it’s important enough that I’ll list it here anyway! It’s my newsletter and my rules. YouTube’s analytics indicate that most of my audience is in the 25–44-year range, which may be why they don’t care about the user experience of older adults. Just you wait: brain decay is coming for you sooner than you think.)

There are many details in these videos, but my main message can be summarized in this infographic (NotebookLM):

Jakob Nielsen, Ph.D., is a usability pioneer with 42 years of experience in UX and the Founder of UX Tigers. He founded the discount usability movement for fast and cheap iterative design, including heuristic evaluation and the 10 usability heuristics. He formulated the eponymous Jakob’s Law of the Internet User Experience. He has been named “the king of usability” by Internet Magazine, “the guru of Web page usability” by The New York Times, and “the next best thing to a true time machine” by USA Today.

Previously, Dr. Nielsen was a Sun Microsystems Distinguished Engineer and a Member of Research Staff at Bell Communications Research, the branch of Bell Labs owned by the Regional Bell Operating Companies. He is the author of 8 books, including the best-selling Designing Web Usability: The Practice of Simplicity (published in 22 languages), the foundational Usability Engineering (29,538 citations in Google Scholar), and the pioneering Hypertext and Hypermedia (published two years before the Web launched).

Dr. Nielsen holds 79 United States patents, mainly on making the Internet easier to use. He received the Lifetime Achievement Award for Human–Computer Interaction Practice from ACM SIGCHI and was named a “Titan of Human Factors” by the Human Factors and Ergonomics Society.

· Subscribe to Jakob’s newsletter to get the full text of new articles emailed to you as soon as they are published.

· Follow Jakob on LinkedIn.

· Read: article about Jakob Nielsen’s career in UX

· Watch: Jakob Nielsen’s first 41 years in UX (8 min. video)
