On May 8, Abu Dhabi–based investment giant International Holding Company (IHC) unveiled Aiden Insight 2.0, the upgraded version of its AI-powered Board Observer. Built with executive AI specialist Aleria, the system, first launched last year, was deployed during IHC's Q1 2025 results meeting, where it delivered strategic recommendations in real time.

Billed as the UAE’s first fully sovereign, on-premise AI board observer, Aiden Insight 2.0 avoids external cloud services to ensure data sovereignty, a priority for organisations dealing with sensitive financial and strategic information. The platform includes a live newsroom that tracks IHC’s performance and global market shifts, while an “AskIHC” feature lets directors instantly pull up pre-analysed financial and operational metrics. As a Large Action Model, it synthesises insight across IHC’s broad portfolio, offering a unified view of risks and performance drivers.

In a similar move, in March sovereign wealth fund ADQ introduced Q, a voice-enabled AI board advisor that draws on ADQ's knowledge base to offer strategic input during board discussions. Combining multiple AI models, Q is designed to support governance and sharpen decision-making.

From pilots to a seat at the table

These examples reflect a growing trend of AI systems sitting alongside human directors, reshaping board governance and amplifying shareholder value. The idea of putting AI in the boardroom, however, isn't new.

Eleven years ago, Hong Kong–based Deep Knowledge Ventures made headlines by appointing an algorithm it called Vital (Validating Investment Tool for Advancing Life Sciences) to its board. Designed to scan vast datasets and recommend investments, Vital has since evolved into a second-generation system that continues to inform the firm's board decisions.

What was once a curiosity is now gaining momentum, with boards worldwide increasingly turning to AI for decision support, scenario planning and risk oversight.

According to an October 2025 analysis by the International Institute for Management Development (IMD), boards are using AI to parse complex datasets, run simulations, and generate predictive models covering everything from macroeconomic shifts to stress tests. AI is also enhancing compliance oversight, with real-time dashboards flagging potential risks such as cybersecurity alerts, regulatory gaps, supply-chain vulnerabilities or geopolitical disruptions far earlier than traditional quarterly reporting cycles allow.

Generative AI (GenAI) is streamlining the governance grind as well. Boards now use it to prepare meeting materials, summarise governance documents, benchmark performance, draft minutes, and distil regulatory or market intelligence. As AI becomes central to corporate operations and strategy, many boards are also formalising the governance of AI itself by setting up dedicated committees, codifying ethics frameworks, and tightening oversight protocols.

When AI joins the board, who thinks better?

In a November 5 Harvard Business Review article, the authors described an experiment by Wharton's Mack Institute and INSEAD's Center for Corporate Governance that directly compared the performance of AI boards with that of human ones.

Participants in INSEAD’s Advanced Board Program spent two hours running a simulated board meeting for a fictional company, Fotin. The Mack Institute created an LLM-agnostic, multi-agent platform to run the same deliberations in parallel, using identical materials and best-practice board protocols.

The AI board earned top scores (3 points) on five of the eight criteria from both expert and LLM evaluators. Its shortcomings were minor: the LLM evaluators rated decision implementability and director preparation slightly lower (2.7 and 2.9, respectively), while the experts gave depth of exploration a 2.7. Human boards scored markedly lower across all eight criteria, with both AI and expert evaluators consistently rating them more harshly than the human participants rated themselves.

Nasdaq shows the way

One of the most advanced deployments comes from Nasdaq Boardvantage, which handles some of the world’s most sensitive board information, from M&A strategy to executive compensation. Nasdaq says its AI tools deliver accuracy rates of 91–97%, far above industry norms, and work across spreadsheets, visualisations, and unstructured documents. Built with Microsoft Foundry, using Azure OpenAI, Azure Document Intelligence, and secure cloud infrastructure, the system automates summarisation and governance workflows while preserving confidentiality.

According to Microsoft, the impact is significant: governance teams save more than 100 hours a year, directors report up to 25% less preparation time, and reading loads have dropped by as much as 60% thanks to concise, high-fidelity summaries, freeing directors to focus on strategy rather than sifting through hundreds of pages.

Nasdaq is now developing an AI Board Assistant that will help prepare agendas, flag emerging risks, and surface insights from past meetings, operating entirely within a secure, tenant-specific environment. The direction of travel is clear: AI is no longer sitting at the edge of the boardroom; it is becoming integral to how boards govern, oversee risk, and make decisions.

But many boards still aren’t ready

A 2024 Deloitte Global survey revealed a striking gap between AI ambition and boardroom reality: 45% of C-suite leaders said AI hadn't made it onto their board's agenda at all, and only 14% said their boards discussed it at every meeting. Nearly half of directors (46%) were worried that their boards weren't spending enough time on AI.

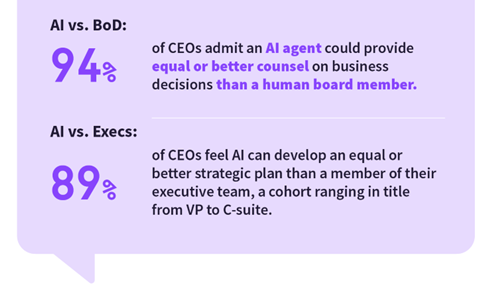

That picture, however, is changing. According to The Global AI Confessions Report: CEO Edition, a Harris Poll survey for Dataiku released in March, three-quarters of CEOs fear they could lose their jobs within two years if they fail to deliver measurable AI-driven results.

Source: Harris Poll survey findings

Sixty-three percent say their boards now demand AI outcomes, and 96% believe those demands are justified. AI is becoming a CEO-level competency: 31% of CEOs expect AI strategy to be a top hiring criterion within four years, and 60% say it will be mandatory within six.

Evidence suggests the pressure is warranted. A 2025 MIT study found that organisations with digitally and AI-savvy boards outperform their peers by 10.9 percentage points in return on equity, while those without such boards trail their industry average by 3.8%.

Yet board governance hasn't kept pace. In a December 4 article, "The AI Reckoning: How Boards Can Evolve", McKinsey notes that although 88% of companies use AI in at least one business function, most boards still lack clarity on how AI fits into corporate strategy or transformation goals. Without alignment between directors and management, oversight risks becoming either superficial or paralysing. McKinsey urges directors to build personal AI fluency by using AI tools for meeting preparation, public research and, with legal approval, analysis of confidential materials.

Concerns remain real

But enthusiasm can obscure genuine risks. Over-reliance on AI exposes boards to bias, hallucinations, privacy breaches, regulatory missteps and ethical pitfalls. A March paper from the Stanford Graduate School of Business, "The Artificially Intelligent Boardroom", warns that adoption will force difficult questions about the division of responsibilities between boards and management. The Harris Poll findings highlight another danger: 94% of CEOs believe employees are using GenAI tools without approval. This "shadow AI" creates unchecked compliance, privacy and data-security vulnerabilities.

With regulation still evolving, McKinsey says boards must probe resilience, observability and explainability to ensure AI systems are stable, traceable and correctable.

Companies are responding. Nasdaq, for instance, says it has adopted responsible AI principles built around transparency, fairness, accessibility, reliability, responsible data management, privacy, security, accountability and oversight.

Meanwhile, just as AI bots begin attending board meetings, companies are rethinking who sits at the table. Boards are increasingly seeking directors with AI, tech, or data-literacy backgrounds, or at least more AI-aware non-executives, to guide strategy and risk oversight. As McKinsey concludes: “Directors don’t need to be data scientists, but they do need enough understanding of AI to grasp both the opportunities and the risks it creates.”
