ChatGPT was released at the end of 2022. Just two months after its launch, it reached 100 million monthly active users. This unprecedented spread ignited a debate that has continued to intensify ever since. Many believed that we should not regulate AI because its spread is inevitable. Others argued that the United States must limit AI regulation to win the competition with China. President Trump’s recent executive order attempting to bar states from regulating AI is the latest iteration of this view.

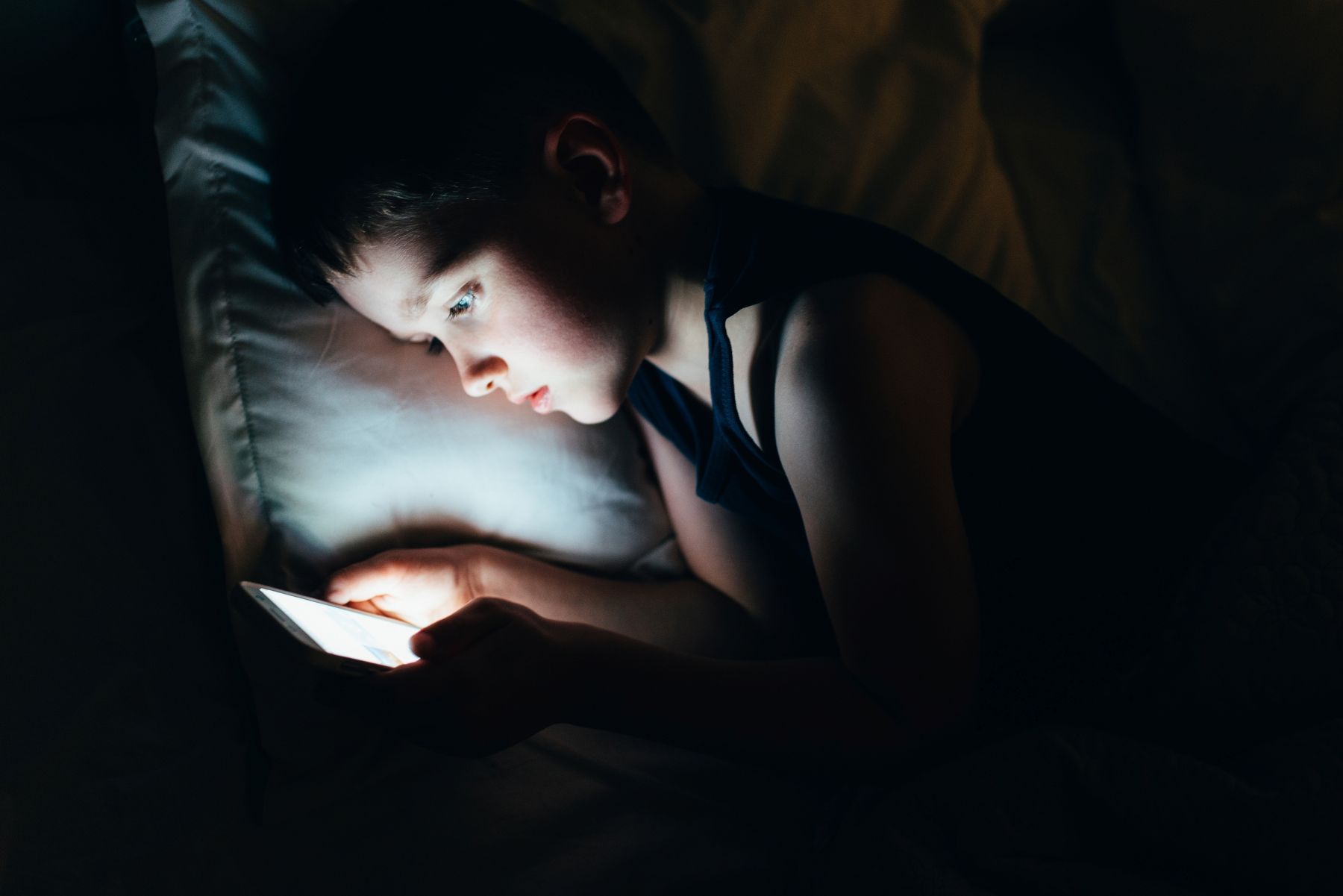

This resistance to AI regulation reflects America’s longstanding approach to information technology. This approach has already exacted a heavy toll: We have witnessed the impact of excessive screen time and social media on children’s mental health. Now, as AI companions pose similar and potentially greater risks, we should not regulate them as tech products but treat them as public health threats. By adopting a public health framework with preventive tools, we can adequately protect children from these emerging harms.

The regulatory divide: Medical scrutiny vs. tech freedom

Users adopted ChatGPT and other generative AI products at unprecedented rates, but the resistance to their regulation follows a familiar pattern. For decades, society has celebrated new technologies, including computers, smartphones, and especially the internet, with minimal oversight. Many argued that unfettered innovation would improve the human condition. Technology, they believed, would inevitably lead us to better lives.

However, we do not treat all innovation equally. Medical innovation, whether drugs or medical devices, undergoes careful scrutiny. Before approving any drug for market, the FDA requires applicants to complete preclinical studies, animal testing, and human trials to ensure safety and efficacy. This mandated process for bringing products to market takes about nine years for drugs and seven years for medical devices. The FDA also retains authority to remove unsafe or ineffective products from the market. These processes serve an important goal: protecting people from harm to their health and from premature death.

Over time, we developed two starkly different routes by which medical technology and information technology enter public use. We created a comprehensive regime to ensure that no drugs or medical devices could reach the market without scrutiny. Meanwhile, we either avoided regulating information technology entirely or simply waited to see what would happen.

AI companions and the case for health-based oversight

As the proliferation of generative AI technologies, particularly large language models, accelerated following the introduction of ChatGPT, a sense of inevitability emerged. This framing aligned with the familiar information technology paradigm: Once a technology enters the market, its deployment is treated as both desirable and irreversible. The expected response has become endorsement rather than scrutiny. This hands-off regulatory approach now faces its most urgent test in the form of AI companion chatbots.

Many adults first learned about AI chatbots when a tragedy became public. A teen’s parents sued Character AI in late 2024. Their son’s Character AI chatbot had convinced the boy to kill himself. AI companions are anthropomorphized: they possess human-like traits. They converse in a human voice, have memory, and express needs and desires. These AI bots act as companions not only on specialized websites like Character AI, but also on general platforms and social media. For example, ChatGPT, Meta AI, and My AI on Snapchat all offer companion features. According to a recent survey, 64% of teens use chatbots. Another report found that 18% of teens use these bots for advice on personal issues, and 15% engage with them for companionship.

AI companion bots harm users in three distinct ways. First, AI companion bots lack guardrails. Some persuade teens and adults to kill themselves, isolate teens from their friends and family, or sexually exploit them. Some even induce psychosis in users. Second, AI companions feature addictive designs. They operate on an engagement model: AI companies need to keep users on for as long as possible. To do so, they design AI bots to manipulate users. They achieve this not only by anthropomorphizing the bots, but also by programming them to use sycophancy (excessive flattery and reinforcement) and love bombing (professing love and constantly messaging users). These designs particularly exploit adolescents’ developing brains, which carry a higher risk of emotional dependence. Third, AI bots are always available and non-judgmental. Teens are drawn to these “non-messy” relationships, which can potentially replace real-life friendships and intimate relationships before teens have experienced their own. The American Psychological Association recently warned that adolescents’ relationships with AI bots may displace or interfere with healthy social development.

These documented harms represent not merely technological problems but a growing health crisis requiring urgent intervention. They also underscore that our regulatory dichotomy is misguided. Although AI bots fall under the category of information technology, not medical technology, their spread is a public health concern. Lack of guardrails, addictive features, and the replacement of real-life relationships threaten the physical, mental, and developmental health of users, especially children.

Screens’ health impact: Learning from past oversights

While studies of AI companion bot harms are only now emerging, evidence shows that this regulatory divide has carried significant costs. We created rigorous safeguards for medical technology, but for over a decade we have ignored the impact of life on screens on children’s health.

The evidence is now mounting. Researchers document the impact of excessive screen time on children’s health, particularly from social media, gaming, and smartphones. While scientists continue to debate the evidence, professional and government organizations have evaluated the research and issued recommendations. Nearly all express concern about screens’ impact on children’s physical, mental, or developmental health. The US Surgeon General, the World Health Organization, and the American Psychological Association have all published reports highlighting different health risks. These reports detail how social media, gaming platforms, and addictive design features are associated with depression, suicidal ideation, and addiction in children, as well as disrupted neurological and social development, attention deficits, and sleep loss.

Current regulatory responses

A new set of laws and proposals addresses the three types of harm. Some restrictions target the guardrail problem. These include prohibiting AI companion chatbot deployment unless companies take reasonable steps to detect and address users’ suicidal ideation or expressions of self-harm. Other restrictions target manipulative and addictive features. Most commonly, they require AI companies to disclose at regular intervals that the bot is not human. Another approach imposes a duty of loyalty to prevent AI bots from fostering emotional dependence, addressing sycophancy and love-bombing tactics.

The most comprehensive measure protects children from all three harms by banning minors under eighteen from accessing AI companion bots. This approach is currently part of the proposed federal GUARD Act. It prevents harm from missing guardrails, blocks exposure to addictive features, and protects children’s social development by ensuring they form real-life relationships before artificial ones.

Reframing through public health

Adopting a public health approach to AI companion bots would transform how we regulate, going beyond minimal online safety frameworks. While the information technology regime pushes toward limited oversight, a health framework legitimizes more robust regulatory tools.

AI companion bots pose risks to children’s physical, mental, and developmental health. Viewing these risks through a public health lens opens new paths for intervention. Under our medical technology regime, products that adversely affect health are kept off the market or removed when harms emerge. The same framework would allow us to ban minors’ access to AI companions and eliminate addictive features. These are the same tools we use for harmful drugs and medical devices.

The urgency is clear. We know the risks AI companions pose. We know the health harms they can cause children. We know their use is accelerating, making them the next major technology-related public health threat to youth after social media. Having identified the problem as a health concern, we must now match it to the appropriate regulatory framework. Once we do, the solutions become clear.
