At least that’s what Chris Urmson, chief executive of autonomous-vehicle software maker Aurora Innovation, insists.

Similar logic applies in a completely different field: legal arbitration. Bridget Mary McCormack, former chief justice of the Michigan Supreme Court and now CEO of the American Arbitration Association, thinks her organization’s new AI Arbitrator will settle some disputes better than most humans would.

Insurance companies have been doing algorithmic decision-making since before it was called artificial intelligence. Along the way, they’ve been sued for bias, and had to update their way of doing business. Early on, regulators made it clear that their AI-based systems would be held to the same standards as human ones. This has forced many insurance companies to make their algorithms “explainable”: They show their work, rather than hiding it in an AI black box.

Unlike many of the hype men who say we’re mere years away from chatbots that are smarter than us, the people making these decision-making systems go to great lengths to document their “thought” processes, and to limit them to areas where it can be shown they’re capable and reliable.

Yet many of us still prefer the judgment of a human.

“You go to a court, and a judge decides, and you don’t have any way to see the way her brain worked to get to that decision,” says McCormack. “But you can build an AI system that’s auditable, and shows its work, and shows the parties how it made the decisions it made.”

We’re on a philosophical fence, she says: We tolerate the opacity of human decision-making despite years of research showing our own fallibility. Yet many of us aren’t ready to believe an automated system can do any better.

The auditor

People are at least as concerned about AI as they are excited about it, says the Pew Research Center. And rightly so: The long history of computer-based decision-making hasn’t exactly been a victory march.

Court systems’ sentencing algorithms proved racially biased. Teacher-evaluation software failed to yield accountability.

“When I wrote my book ‘Weapons of Math Destruction’ 10 years ago, I made the point, which was really true at the time, that a lot of these systems were being put into place as a way to avoid accountability,” says Cathy O’Neil, who is an algorithmic auditor.


But these early attempts were important, even if they failed, she adds, because once a process is digitized, it generates unprecedented amounts of data. When companies are forced to hand over to regulators, or opposing counsel, internal records of what their algorithms have been up to, the result is a kind of involuntary transparency.

O’Neil probes decision-making software to determine whether it’s working as intended, and whom it might be harming. On behalf of plaintiffs who might be suing over anything from financial fraud to social-media harm, she examines piles and piles of output from the software.

“One of the most exciting moments of my job is to get the data,” she says. “We can see what these people did and they can’t deny it: it’s their data.”

She focuses on the impacts of an algorithm (the distribution of the decisions it makes, rather than how it arrived at those decisions), which is why her methods have changed little even as LLMs and generative AI have taken over the field.

O’Neil is hopeful about the future of holding companies accountable for their algorithms, because it’s one of the few areas of bipartisan agreement remaining in the U.S. At a recent Wall Street Journal conference, Sen. Lindsey Graham (R., S.C.) said that he believes tech companies should be held responsible for any harms their systems might cause.

Verify, then trust

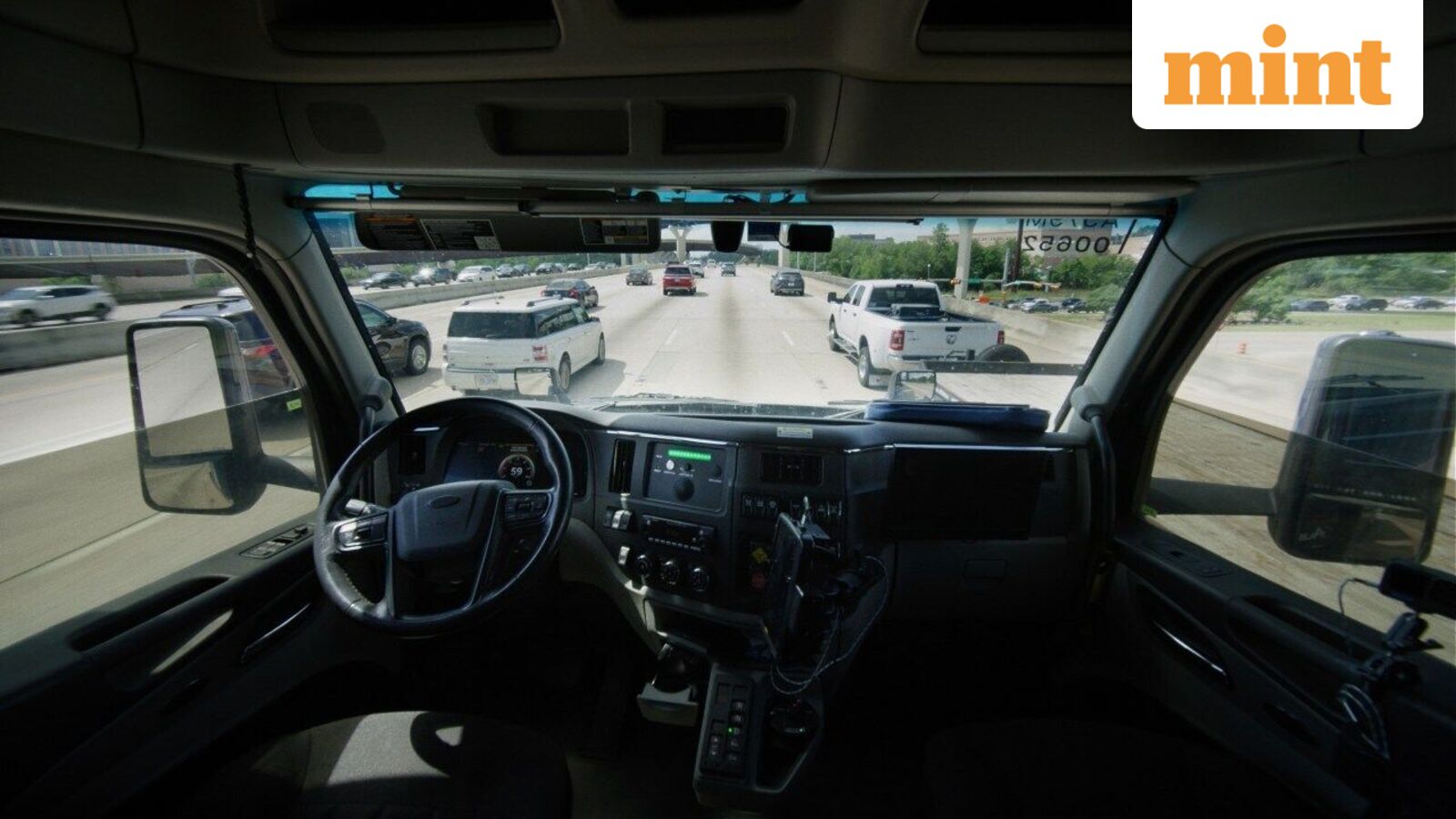

In 2023, engineers at Aurora, the autonomous-truck software maker, looked at every fatal collision on Interstate 45 between Dallas and Houston from 2018 through 2022. Based on police reports, the team created simulations of every one of those crashes, and then had their AI, known as Aurora Driver, navigate the simulation.

“The Aurora Driver would have avoided the collision in every instance,” says Urmson, the CEO.

Yet the company also faced a recent setback. Last spring, Aurora put trucks on the road hauling freight in Texas with no driver behind the wheel. Two weeks later, at the request of one of its manufacturers, Aurora had to bring back human observers. The company has emphasized that its software is still fully responsible for driving, and expects some of its trucks will be completely unmanned again by the middle of 2026.

At launch, the American Arbitration Association is only offering its AI Arbitrator for a specific kind of case for which today’s AI is well-suited: those decided solely by documents. The system provides transparency, explainability and monitoring for deviations from what human experts might conclude in a case.

Yet despite the fact that professional arbitrators, judges and law students have found AI Arbitrator reliable in testing, no one has opted to use it in a real-life case since its debut last month. McCormack says this may be because of its novelty and practitioners’ lack of familiarity. (Both parties in a dispute must agree to use the bot at the outset.)

For those asking the public to trust their AIs, it doesn’t help that systems based on related technology are causing harm in ways we all hear about daily, from suicide-encouraging chatbots to image generators that devour jobs and intellectual property.

“If you just ask people in the abstract, ‘Why wouldn’t you trust AI to make your disputes?’ they automatically think, ‘Well, why would I throw my dispute into ChatGPT?’ ” says McCormack. “And I’m not recommending that.”

In some areas such as human resources, even AI industry professionals argue that human emotion is important, and that AI decision-making might be too dispassionate.

But responsible development could start to balance the scales against the negative aspects of AI, as long as we can verify that these systems do what their makers claim. Imagine a future in which a busy stretch of highway has far fewer fatalities. Maybe even zero.

“It’s easy to get lost in the statistics and the data,” says Urmson, reflecting on the horrific, and avoidable, accidents his team has studied. “But when you start to think about the consequences for people’s lives, it’s a whole different thing.”

Write to Christopher Mims at christopher.mims@wsj.com
