What if artificial intelligence looks at your language and sees nothing?

For millions of people, this isn’t a metaphor. It’s the daily reality of interacting with AI systems that simply don’t understand the language people use to participate in public life.

Today’s most advanced AI models perform impressively in English and a handful of dominant languages, while hundreds of others remain almost entirely invisible. And when AI cannot understand a language, it cannot reliably inform, protect or empower the people who speak it, especially in civic and democratic spaces where accuracy matters the most.

This isn’t a niche fairness issue but rather a growing democratic fault line.

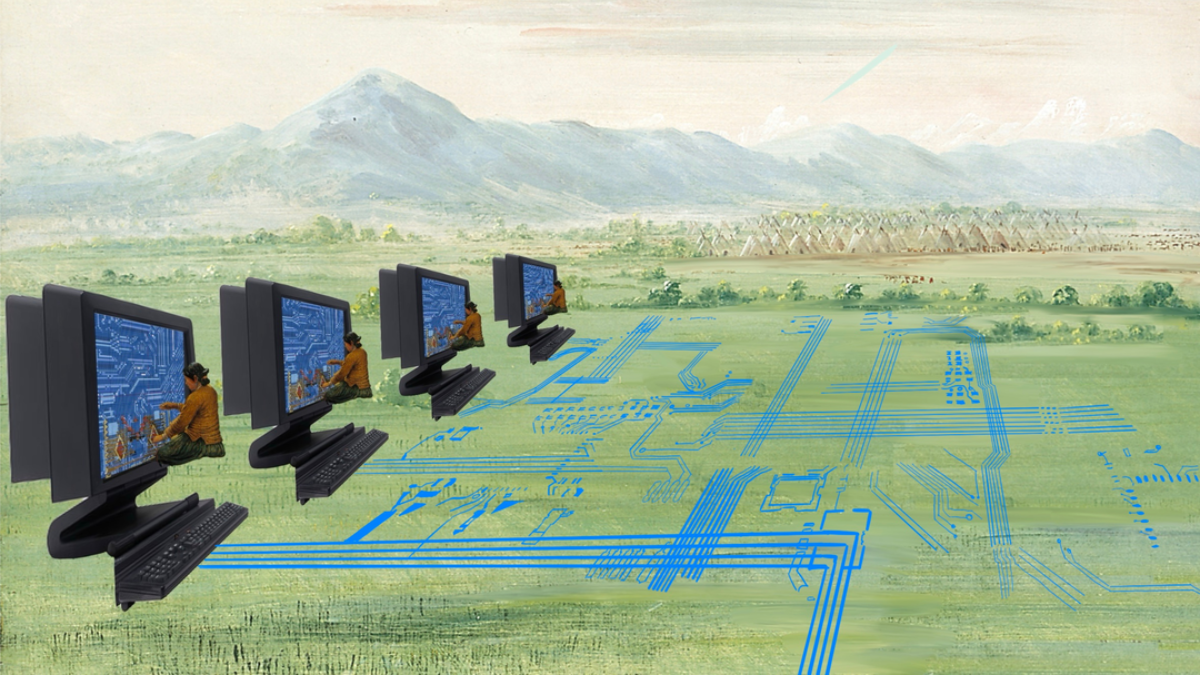

A new divide

For decades, the digital divide was defined by access to internet connectivity. But a new divide is now emerging across connected societies: the divide between the languages AI can “see” and those it can’t. And it’s widening fast.

The newly released SAHARA benchmark evaluated 517 African languages across a range of AI tasks. The results were stark: English performs near the top of every task, while many African languages, including those spoken by millions, such as Fulfulde, Wolof, Hausa, Oromo, and Kinyarwanda, consistently cluster among the lowest performers across reasoning, generation, and classification tasks.

The discrepancy appears to have little to do with linguistic complexity. The SAHARA benchmark attributes these gaps to uneven data availability: languages with sparse or incomplete digital datasets consistently score lower, even when they’re linguistically straightforward. This reflects decades of underinvestment in datasets, documentation and digital infrastructure. When models have no training data for a language, they don’t degrade gracefully. Research on multilingual AI shows markedly higher hallucination rates in low-resource languages, along with well-documented tendencies to amplify stereotypes and misclassify dialect or culturally specific content.

And the issue is not limited to spoken languages. A recent report by the European Union of the Deaf documents how many AI tools for sign languages misinterpret grammar, flatten cultural nuance and misread even basic expressions. This often happens because they were developed without meaningful Deaf community involvement, reinforcing the sense that accessibility is something bolted on after the fact rather than a democratic requirement.

Across both spoken and signed languages, the evidence points toward a consistent pattern: AI systems struggle most with languages that receive the least political and economic investment, even when those languages serve millions. And that has direct implications for democratic equity.

When AI doesn’t understand you, civic safety falls

Much of the conversation around AI safety focuses on principle alignment, model robustness and misuse prevention. But those safeguards only function when a model can understand the input it receives.

Real-world cases may reveal some of the consequences. Ahead of Kenya’s 2022 elections, a Global Witness and Foxglove investigation found that Facebook approved ads containing explicit hate speech in both English and Swahili, content that should not have passed moderation. The report doesn’t identify the reason for the lapse, but Meta has repeatedly emphasized that AI handles a substantial share of its moderation workload. The incident therefore likely reflects a broader pattern: safety mechanisms break down most sharply in languages where AI systems have limited underlying support. In Nigeria, recent research demonstrates that widely used hate-speech detection systems dramatically overestimate their performance when applied to political discourse involving code-switching, Pidgin and other local language patterns.

Multilingual safety research consistently shows that content moderation, toxicity detection and misinformation classification degrade sharply in low-resource languages. In practice, this means hate speech or election-related incitement goes undetected in regional or minority languages; benign civic content is misclassified as harmful, suppressing legitimate political speech; and safety filters that work reliably in English collapse in languages with sparse training data.

Evaluations of LLM-based moderation systems reach similar conclusions: when models are asked to assess toxicity across languages, agreement and reliability drop as one moves from high-resource to low-resource languages, categories defined by how much training data exists. These breakdowns shape the information environment for millions of speakers.

The stakes go beyond platform moderation. The World Bank’s Generative AI Foundations report underscores how AI tools used in health, agriculture and education produce inconsistent, and sometimes dangerous, outputs when prompts are translated into low-resource languages. As public institutions integrate AI into service delivery, these failures become governance failures, not just technical glitches.

And in elections, the risks compound. Research shows that election-related disinformation campaigns already target communities whose languages receive the weakest moderation and safety protections. Public-facing chatbots and “multilingual” portals, often powered by brittle translation systems, have also been shown to routinely misinterpret queries in lower-resource languages, a pattern that can lead to incorrect guidance on benefits, rights or political process. When a citizen receives misleading civic information, the effect is indistinguishable from disenfranchisement.

Linguistic inequity in AI is not a peripheral fairness concern. It determines who has access to accurate information, who can contest decisions and who can meaningfully participate in democratic life.

A governance blind spot with democratic consequences

Governments are beginning to sound the alarm. South Africa, during its G20 digital economy presidency, openly warned that linguistic inequity in AI risks excluding billions of people from the digital economy. UNESCO’s recommendation on the ethics of AI underscores the principles of inclusiveness and non-discrimination. Yet major global governance frameworks still treat multilingual capability as optional. For instance, the European Union AI Act, one of the world’s most comprehensive governance frameworks, doesn’t require developers to report or guarantee model performance across the languages spoken by affected users, except in limited consumer-facing cases. Multilingual performance remains largely advisory rather than mandatory: a stretch goal rather than a baseline requirement.

The deeper issue is that linguistic equity is still regarded as a technical inconvenience instead of a governance priority. Yet the ability of AI systems to understand diverse languages is directly tied to democratic participation, the right to information and equitable access to essential services.

Emerging research on moral reasoning reinforces this point: large language models (LLMs) exhibit systematically different ethical judgements across languages, with the largest deficiencies in low-resource ones. Safety failures aren’t just about toxicity, but about whose values and voices are heard by the system at all.

Make linguistic equity a hard safety requirement

If AI is to support rather than undermine democratic participation, governments and platforms must stop treating multilingual capability as a bonus feature. Linguistic equity must be understood as a safety principle in its own right, as fundamental as robustness, transparency and accountability.

Policymakers and regulators can move this from rhetoric to practice by:

- Mandating language-specific performance reporting: Model cards and safety evaluations should disclose accuracy and safety metrics for every language the system claims to support, especially when dealing with high-stakes tasks like moderation and civic information. If a model cannot meet minimum thresholds, it should not be approved for those applications.

- Building language datasets as public infrastructure: Investments in digital public infrastructure must include long-term support for datasets for indigenous, minority, regional and signed languages. These datasets should be treated as digital public goods.

- Funding community-led dataset creation: Communities must be compensated and empowered to lead dataset creation, annotation and evaluation. No AI system can meaningfully represent a language without its speakers.

- Enforcing multilingual performance in public procurement: Governments should refuse to procure AI systems that cannot demonstrate reliability in the languages the public actually uses. Multilingual capability should be a gatekeeping condition, not a checkbox.

- Recognizing linguistic bias as a democratic risk: Election commissions and digital regulators should treat linguistic inequity as a vector for misinformation and unequal civic access.
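
To make the first recommendation concrete, here is a minimal sketch of what language-specific performance reporting with a minimum threshold could look like. Everything in it is an assumption for illustration: the function names, the record format, the 0.80 threshold and the toy data are hypothetical and do not come from any benchmark, regulation or report cited above.

```python
from collections import defaultdict

# Hypothetical minimum accuracy for a high-stakes task.
# The article does not propose a specific number; 0.80 is a placeholder.
MIN_ACCURACY = 0.80


def per_language_report(records, min_accuracy=MIN_ACCURACY):
    """Compute accuracy per language and flag languages below the threshold.

    `records` is an iterable of (language, predicted_label, true_label)
    tuples from an evaluation set (an assumed format).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for language, predicted, expected in records:
        total[language] += 1
        if predicted == expected:
            correct[language] += 1

    report = {}
    for language in sorted(total):
        accuracy = correct[language] / total[language]
        report[language] = {
            "n": total[language],
            "accuracy": accuracy,
            "meets_threshold": accuracy >= min_accuracy,
        }
    return report


def approved_languages(report):
    """Return the languages whose results clear the threshold."""
    return [lang for lang, row in report.items() if row["meets_threshold"]]


# Made-up toy data for illustration only (not real evaluation results).
toy_records = [
    ("lang_a", "hate", "hate"),
    ("lang_a", "benign", "benign"),
    ("lang_a", "benign", "benign"),
    ("lang_a", "hate", "hate"),
    ("lang_b", "benign", "hate"),
    ("lang_b", "benign", "benign"),
    ("lang_b", "hate", "benign"),
    ("lang_b", "hate", "hate"),
]

report = per_language_report(toy_records)
for language, row in report.items():
    print(
        f"{language}: n={row['n']} accuracy={row['accuracy']:.2f} "
        f"approved={row['meets_threshold']}"
    )
```

The point of the design is that results are never averaged across languages: a single aggregate score would hide exactly the gap the reporting requirement is meant to expose. A real disclosure would likely also need sample sizes large enough to be meaningful and safety metrics beyond plain accuracy.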

No language should be digitally invisible

AI is becoming the interface between many people and the institutions that shape their lives. But it cannot serve humanity if it only understands a fraction of it. To build AI that’s genuinely safe and inclusive, we must align behind a simple commitment: no language should be invisible.

Linguistic equity is not peripheral to AI safety. It’s a democratic imperative. And unless regulators and platforms act now, AI will deepen the existing divides, not because of malicious actors, but because entire languages remain invisible to the systems that are shaping the future.
